Feedback makes your training programs stronger. You should be looking for constructive feedback from learners on the courses you offer them. It’s the best way to improve. For an evaluation to be useful, you need to ask the right questions about your course. By focusing on the features and performance that matter, you can make corrections and improvements easily.

Learners who take the courses will give the most critical feedback, but experts should weigh in too. Subject matter experts and other specialists see courses in a different way from learners. They will look at training content at a deeper level to find errors and spark ideas for improvement.

If you’re at the point where you want to know if your training materials are measuring up, read on for more tips on how to gather feedback and what to do with it once you have it.

Making Evaluations Part of a Successful Course

Evaluations should be part of every course you offer no matter what. As tempting as it is to call a project “done” and stop soliciting feedback, don’t skip this step.

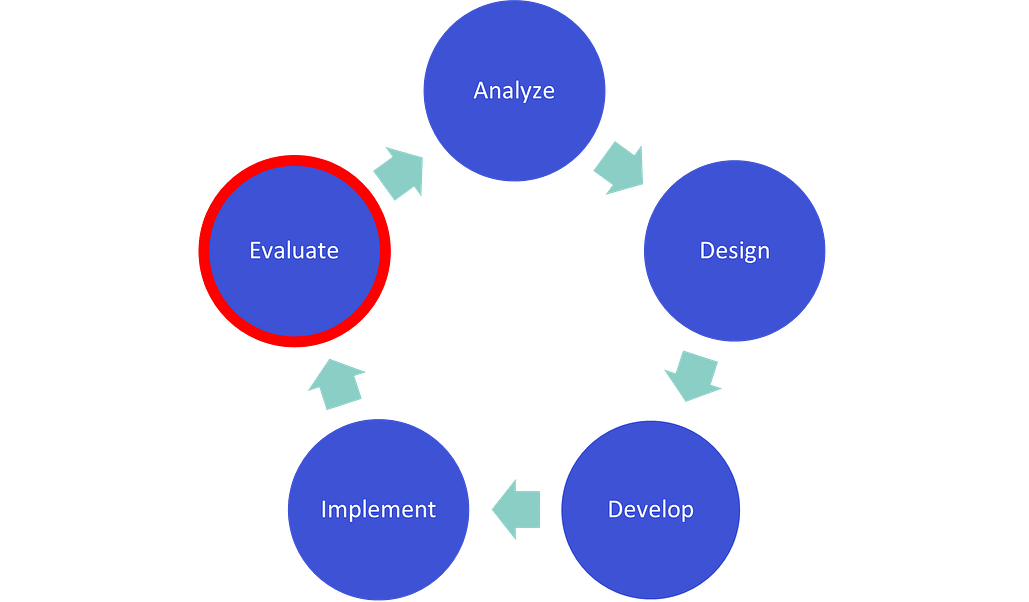

If you are following the development methodology called ADDIE, it is easy to include evaluation in every cycle.

ADDIE is an acronym that stands for Analyze, Design, Develop, Implement, and Evaluate. It is an instructional design model that can help you think about and plan a course design. While it seems rigid, the model can be adapted to many projects, depending on what kinds of materials you already have.

The ADDIE blueprint provides the structure for many online course projects.

The “E” in ADDIE stands for “Evaluate,” which is the final stage.

The ADDIE model recommends course evaluation be done in two parts:

- Formative evaluation

- Summative evaluation

Formative evaluation should happen while you’re developing the course. It’s designed to make sure you’re meeting all the learning objectives you set out to meet.

Summative evaluation happens at the end, once the course is done and you’re looking for suggestions for improvement.

The area of evaluation in professional instructional design is very complex and will depend on your program and goals. You’ll ideally enter the evaluation stage with a plan that spells out how the training program will be reviewed and tested and another plan that includes specific review criteria.

However, anyone working on a training project can apply these evaluation principles to their project as a starting point. It will help make any course successful.

Evaluating

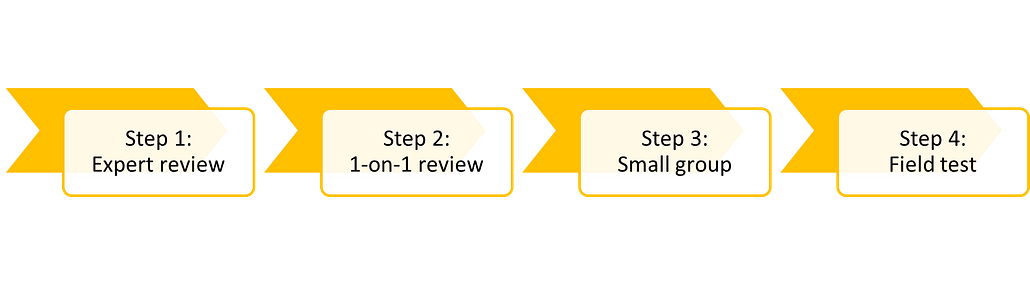

Let’s have a closer look at what formative evaluation means and what kinds of steps you should follow if you want to make sure your training is on the right track.

Step 1: Expert review

First, have an expert look at the curriculum you’re creating. An expert will be familiar with specifics a learner won’t, such as health recommendations, best practices, or common misconceptions. They’ll be able to gauge the accuracy of what you’re creating.

An expert review could involve one person or more. An expert could be:

- An instructional designer, who understands how to design and deliver educational material

- A subject-matter expert, who knows the material well

- A technology expert, who can evaluate the success of the delivery system (e.g., learning management system)

Step 2: One-on-one review

Next, have a learner look at the material. A learner will be able to review the materials in a different way from an expert. Whereas an expert will have a deeper understanding of the mechanics and material, they could take other areas for granted.

A learner will spot errors and inconsistencies that someone very familiar with the content could miss. The learner reviewer should be looking for clarity, ease of use, errors, and general feedback. After all, a successful learning experience should be a priority for any course.

The one-on-one review requires just one learner. This isn’t the same as releasing the content to a pilot group for a dress rehearsal. An evaluator or someone to take notes can be in the room to capture feedback.

If you like, you can make updates and have another learner walk through the material, but just one at a time.

Step 3: Small group review

The next step after sitting down with one learner at a time—and making updates to the content based on their feedback—is to assemble a small group.

A small group of learners is like a focus group. The group is guided through the whole training. An evaluator, such as an instructional designer, should facilitate the discussion and make notes. This should be as close as possible to a real learning scenario, but with the intention of gathering more feedback at the end.

Here are some important factors to consider with a small group review:

- Collection method. Written questionnaires, video recording, and note-taking are common methods.

- Ask questions that support improvements. A question such as “How did the training meet your expectations?” might be interesting, but it doesn’t really provide specifics about how to improve. Choose your questions carefully.

- Ask questions before and after. Measure confidence in completing tasks related to the training by asking questions before the training and afterward. Then, you can measure how well people feel they improved by the time they finished.
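The before-and-after comparison can be tallied with a few lines of code. The following is only a minimal sketch in Python, assuming learners rate their confidence on a 1 to 5 scale for the same questions before and after the training. The learner names, question labels, scores, and the `confidence_gain` function are all hypothetical and exist only for illustration.

```python
from statistics import mean

# Hypothetical survey data (an assumption for illustration): each learner
# rates their confidence on a 1-5 scale for the same task-related questions
# before and after the training.
before = {
    "learner_a": {"task_1": 2, "task_2": 3},
    "learner_b": {"task_1": 1, "task_2": 4},
    "learner_c": {"task_1": 3, "task_2": 3},
}
after = {
    "learner_a": {"task_1": 4, "task_2": 4},
    "learner_b": {"task_1": 3, "task_2": 4},
    "learner_c": {"task_1": 5, "task_2": 3},
}


def confidence_gain(before, after):
    """Return the average change in self-rated confidence per question."""
    changes = {}
    for learner, pre_scores in before.items():
        post_scores = after.get(learner)
        if post_scores is None:
            continue  # skip learners who did not answer the follow-up
        for question, pre in pre_scores.items():
            if question in post_scores:
                changes.setdefault(question, []).append(post_scores[question] - pre)
    return {question: mean(deltas) for question, deltas in changes.items()}


gains = confidence_gain(before, after)
for question, gain in sorted(gains.items()):
    print(f"{question}: average change {gain:+.2f}")
```

A question with a small or negative average change points to a part of the training that may need another look. Keep in mind that this measures how confident people feel, not how well they actually perform the task.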

Step 4: Field test

The field test is a real-world trial or a pilot. The training material should be as perfect as you can get it, and then you offer it to a limited group to see how it goes.

The field test and the small group review are very similar. In the field test, however, you also want to ask questions about the improvements you made after the small group review. The field test might also involve a larger group, up to 30 people.

Once you go through each step of these evaluations, you’ll be in a great position to find out if your course will be successful. Following this process makes tweaks much easier and less costly. A little preparation and careful planning lead to better projects that accomplish your training program goals the way they were intended.